move from signals to apps, add dedicated feature docs, add config toggle to menu item, undo unnecessary changes

parent

c88dda90d4

commit

247907ef25

1

.github/workflows/ci.yml

vendored

@ -78,6 +78,7 @@ jobs:

|

|||||||

${{ runner.os }}-${{ matrix.python-version }}-${{ matrix.node-version }}-collectstatic-${{ hashFiles('**/*.css', '**/*.js', 'vue/src/*') }}

|

${{ runner.os }}-${{ matrix.python-version }}-${{ matrix.node-version }}-collectstatic-${{ hashFiles('**/*.css', '**/*.js', 'vue/src/*') }}

|

||||||

|

|

||||||

- name: Django Testing project

|

- name: Django Testing project

|

||||||

|

timeout-minutes: 15

|

||||||

run: pytest --junitxml=junit/test-results-${{ matrix.python-version }}.xml

|

run: pytest --junitxml=junit/test-results-${{ matrix.python-version }}.xml

|

||||||

|

|

||||||

- name: Publish Test Results

|

- name: Publish Test Results

|

||||||

|

|||||||

@ -3,6 +3,7 @@ import traceback

|

|||||||

from django.apps import AppConfig

|

from django.apps import AppConfig

|

||||||

from django.conf import settings

|

from django.conf import settings

|

||||||

from django.db import OperationalError, ProgrammingError

|

from django.db import OperationalError, ProgrammingError

|

||||||

|

from django.db.models.signals import post_save, post_delete

|

||||||

from django_scopes import scopes_disabled

|

from django_scopes import scopes_disabled

|

||||||

|

|

||||||

from recipes.settings import DEBUG

|

from recipes.settings import DEBUG

|

||||||

@ -14,6 +15,12 @@ class CookbookConfig(AppConfig):

|

|||||||

def ready(self):

|

def ready(self):

|

||||||

import cookbook.signals # noqa

|

import cookbook.signals # noqa

|

||||||

|

|

||||||

|

if not settings.DISABLE_EXTERNAL_CONNECTORS:

|

||||||

|

from cookbook.connectors.connector_manager import ConnectorManager # Needs to be here to prevent loading race condition of oauth2 modules in models.py

|

||||||

|

handler = ConnectorManager()

|

||||||

|

post_save.connect(handler, dispatch_uid="connector_manager")

|

||||||

|

post_delete.connect(handler, dispatch_uid="connector_manager")

|

||||||

|

|

||||||

# if not settings.DISABLE_TREE_FIX_STARTUP:

|

# if not settings.DISABLE_TREE_FIX_STARTUP:

|

||||||

# # when starting up run fix_tree to:

|

# # when starting up run fix_tree to:

|

||||||

# # a) make sure that nodes are sorted when switching between sort modes

|

# # a) make sure that nodes are sorted when switching between sort modes

|

||||||

|

|||||||

@ -29,13 +29,13 @@ class ActionType(Enum):

|

|||||||

|

|

||||||

@dataclass

|

@dataclass

|

||||||

class Work:

|

class Work:

|

||||||

instance: REGISTERED_CLASSES

|

instance: REGISTERED_CLASSES | ConnectorConfig

|

||||||

actionType: ActionType

|

actionType: ActionType

|

||||||

|

|

||||||

|

|

||||||

# The way ConnectorManager works is as follows:

|

# The way ConnectorManager works is as follows:

|

||||||

# 1. On init, it starts a worker & creates a queue for 'Work'

|

# 1. On init, it starts a worker & creates a queue for 'Work'

|

||||||

# 2. Then any time it's called, it verifies the type of action (create/update/delete) and if the item is of interest, pushes the Work (non blocking) to the queue.

|

# 2. Then any time it's called, it verifies the type of action (create/update/delete) and if the item is of interest, pushes the Work (non-blocking) to the queue.

|

||||||

# 3. The worker consumes said work from the queue.

|

# 3. The worker consumes said work from the queue.

|

||||||

# 3.1 If the work is of type ConnectorConfig, it flushes its cache of known connectors (per space.id)

|

# 3.1 If the work is of type ConnectorConfig, it flushes its cache of known connectors (per space.id)

|

||||||

# 3.2 If work is of type REGISTERED_CLASSES, it asynchronously fires off all connectors and waits for them to finish (runtime should depend on the 'slowest' connector)

|

# 3.2 If work is of type REGISTERED_CLASSES, it asynchronously fires off all connectors and waits for them to finish (runtime should depend on the 'slowest' connector)

|

||||||
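The numbered comment above describes a queue-plus-worker design. The following is a minimal, self-contained sketch of that flow, not the project's implementation: it swaps `multiprocessing.Process` and `JoinableQueue` for a `threading.Thread` and `queue.Queue` so it runs without Django, and the names `SketchManager`, `Item`, `ConfigChange`, `load_connectors` and `loads` are hypothetical stand-ins introduced only for illustration.

```python
import asyncio
import queue
import threading
from dataclasses import dataclass
from enum import Enum
from typing import Any


class ActionType(Enum):
    CREATED = 1
    UPDATED = 2
    DELETED = 3


@dataclass
class ConfigChange:  # hypothetical stand-in for ConnectorConfig
    space_id: int


@dataclass
class Item:  # hypothetical stand-in for one of the REGISTERED_CLASSES
    space_id: int
    name: str


@dataclass
class Work:
    instance: Any
    action: ActionType


async def _fan_out(connectors, work):
    # Step 3.2: start every connector at once and wait for all of them,
    # so the total runtime tracks the slowest connector.
    await asyncio.gather(*(c(work) for c in connectors), return_exceptions=True)


class SketchManager:
    def __init__(self, load_connectors):
        # Step 1: create the queue and start the worker.
        # load_connectors(space_id) is assumed to return a list of async callables.
        self._load = load_connectors
        self._queue = queue.Queue(maxsize=100)
        self.loads = 0  # counts cache (re)builds, for illustration only
        threading.Thread(target=self._run, daemon=True).start()

    def __call__(self, instance, created=False, deleted=False):
        # Step 2: ignore uninteresting objects, classify the action, and
        # enqueue without blocking the caller.
        if not isinstance(instance, (Item, ConfigChange)):
            return
        if deleted:
            action = ActionType.DELETED
        elif created:
            action = ActionType.CREATED
        else:
            action = ActionType.UPDATED
        try:
            self._queue.put_nowait(Work(instance, action))
        except queue.Full:
            pass  # assumption: drop the work rather than block the request

    def join(self):
        # Block until every queued item has been processed.
        self._queue.join()

    def _run(self):
        cache = {}  # space_id -> list of connectors
        while True:
            work = self._queue.get()  # Step 3: consume work from the queue
            try:
                space_id = work.instance.space_id
                if isinstance(work.instance, ConfigChange):
                    cache.pop(space_id, None)  # Step 3.1: flush this space's cache
                    continue
                if space_id not in cache:
                    cache[space_id] = self._load(space_id)
                    self.loads += 1
                if cache[space_id]:
                    asyncio.run(_fan_out(cache[space_id], work))
            finally:
                self._queue.task_done()
```

In this sketch a config change only invalidates the cache, and the connector list is rebuilt lazily on the next piece of work for that space. The code in the diff appears to rebuild the list immediately when it sees a `ConnectorConfig`, so treat the lazy variant as a simplification.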

@ -50,6 +50,7 @@ class ConnectorManager:

|

|||||||

self._worker = multiprocessing.Process(target=self.worker, args=(self._queue,), daemon=True)

|

self._worker = multiprocessing.Process(target=self.worker, args=(self._queue,), daemon=True)

|

||||||

self._worker.start()

|

self._worker.start()

|

||||||

|

|

||||||

|

# Called by post save & post delete signals

|

||||||

def __call__(self, instance: Any, **kwargs) -> None:

|

def __call__(self, instance: Any, **kwargs) -> None:

|

||||||

if not isinstance(instance, self._listening_to_classes) or not hasattr(instance, "space"):

|

if not isinstance(instance, self._listening_to_classes) or not hasattr(instance, "space"):

|

||||||

return

|

return

|

||||||

@ -77,13 +78,15 @@ class ConnectorManager:

|

|||||||

|

|

||||||

@staticmethod

|

@staticmethod

|

||||||

def worker(worker_queue: JoinableQueue):

|

def worker(worker_queue: JoinableQueue):

|

||||||

|

# https://stackoverflow.com/a/10684672 Close open connections after starting a new process to prevent re-use of same connections

|

||||||

from django.db import connections

|

from django.db import connections

|

||||||

connections.close_all()

|

connections.close_all()

|

||||||

|

|

||||||

loop = asyncio.new_event_loop()

|

loop = asyncio.new_event_loop()

|

||||||

asyncio.set_event_loop(loop)

|

asyncio.set_event_loop(loop)

|

||||||

|

|

||||||

_connectors: Dict[int, List[Connector]] = dict()

|

#

|

||||||

|

_connectors_cache: Dict[int, List[Connector]] = dict()

|

||||||

|

|

||||||

while True:

|

while True:

|

||||||

try:

|

try:

|

||||||

@ -98,7 +101,7 @@ class ConnectorManager:

|

|||||||

refresh_connector_cache = isinstance(item.instance, ConnectorConfig)

|

refresh_connector_cache = isinstance(item.instance, ConnectorConfig)

|

||||||

|

|

||||||

space: Space = item.instance.space

|

space: Space = item.instance.space

|

||||||

connectors: Optional[List[Connector]] = _connectors.get(space.id)

|

connectors: Optional[List[Connector]] = _connectors_cache.get(space.id)

|

||||||

|

|

||||||

if connectors is None or refresh_connector_cache:

|

if connectors is None or refresh_connector_cache:

|

||||||

if connectors is not None:

|

if connectors is not None:

|

||||||

@ -117,9 +120,10 @@ class ConnectorManager:

|

|||||||

logging.exception(f"failed to initialize {config.name}")

|

logging.exception(f"failed to initialize {config.name}")

|

||||||

continue

|

continue

|

||||||

|

|

||||||

connectors.append(connector)

|

if connector is not None:

|

||||||

|

connectors.append(connector)

|

||||||

|

|

||||||

_connectors[space.id] = connectors

|

_connectors_cache[space.id] = connectors

|

||||||

|

|

||||||

if len(connectors) == 0 or refresh_connector_cache:

|

if len(connectors) == 0 or refresh_connector_cache:

|

||||||

worker_queue.task_done()

|

worker_queue.task_done()

|

||||||

|

|||||||

@ -11,4 +11,5 @@ def context_settings(request):

|

|||||||

'PRIVACY_URL': settings.PRIVACY_URL,

|

'PRIVACY_URL': settings.PRIVACY_URL,

|

||||||

'IMPRINT_URL': settings.IMPRINT_URL,

|

'IMPRINT_URL': settings.IMPRINT_URL,

|

||||||

'SHOPPING_MIN_AUTOSYNC_INTERVAL': settings.SHOPPING_MIN_AUTOSYNC_INTERVAL,

|

'SHOPPING_MIN_AUTOSYNC_INTERVAL': settings.SHOPPING_MIN_AUTOSYNC_INTERVAL,

|

||||||

|

'DISABLE_EXTERNAL_CONNECTORS': settings.DISABLE_EXTERNAL_CONNECTORS,

|

||||||

}

|

}

|

||||||

|

|||||||

@ -4,12 +4,11 @@ from django.conf import settings

|

|||||||

from django.contrib.auth.models import User

|

from django.contrib.auth.models import User

|

||||||

from django.contrib.postgres.search import SearchVector

|

from django.contrib.postgres.search import SearchVector

|

||||||

from django.core.cache import caches

|

from django.core.cache import caches

|

||||||

from django.db.models.signals import post_save, post_delete

|

from django.db.models.signals import post_save

|

||||||

from django.dispatch import receiver

|

from django.dispatch import receiver

|

||||||

from django.utils import translation

|

from django.utils import translation

|

||||||

from django_scopes import scope, scopes_disabled

|

from django_scopes import scope, scopes_disabled

|

||||||

|

|

||||||

from cookbook.connectors.connector_manager import ConnectorManager

|

|

||||||

from cookbook.helper.cache_helper import CacheHelper

|

from cookbook.helper.cache_helper import CacheHelper

|

||||||

from cookbook.helper.shopping_helper import RecipeShoppingEditor

|

from cookbook.helper.shopping_helper import RecipeShoppingEditor

|

||||||

from cookbook.managers import DICTIONARY

|

from cookbook.managers import DICTIONARY

|

||||||

@ -162,9 +161,3 @@ def clear_unit_cache(sender, instance=None, created=False, **kwargs):

|

|||||||

def clear_property_type_cache(sender, instance=None, created=False, **kwargs):

|

def clear_property_type_cache(sender, instance=None, created=False, **kwargs):

|

||||||

if instance:

|

if instance:

|

||||||

caches['default'].delete(CacheHelper(instance.space).PROPERTY_TYPE_CACHE_KEY)

|

caches['default'].delete(CacheHelper(instance.space).PROPERTY_TYPE_CACHE_KEY)

|

||||||

|

|

||||||

|

|

||||||

if not settings.DISABLE_EXTERNAL_CONNECTORS:

|

|

||||||

handler = ConnectorManager()

|

|

||||||

post_save.connect(handler, dispatch_uid="connector_manager")

|

|

||||||

post_delete.connect(handler, dispatch_uid="connector_manager")

|

|

||||||

|

|||||||

@ -335,7 +335,7 @@

|

|||||||

<a class="dropdown-item" href="{% url 'view_space_manage' request.space.pk %}"><i

|

<a class="dropdown-item" href="{% url 'view_space_manage' request.space.pk %}"><i

|

||||||

class="fas fa-server fa-fw"></i> {% trans 'Space Settings' %}</a>

|

class="fas fa-server fa-fw"></i> {% trans 'Space Settings' %}</a>

|

||||||

{% endif %}

|

{% endif %}

|

||||||

{% if request.user == request.space.created_by or user.is_superuser %}

|

{% if not DISABLE_EXTERNAL_CONNECTORS and request.user == request.space.created_by or not DISABLE_EXTERNAL_CONNECTORS and user.is_superuser %}

|

||||||

<a class="dropdown-item" href="{% url 'list_connector_config' %}"><i

|

<a class="dropdown-item" href="{% url 'list_connector_config' %}"><i

|

||||||

class="fas fa-sync-alt fa-fw"></i> {% trans 'External Connectors' %}</a>

|

class="fas fa-sync-alt fa-fw"></i> {% trans 'External Connectors' %}</a>

|

||||||

{% endif %}

|

{% endif %}

|

||||||

|

|||||||

@ -112,6 +112,9 @@ def recipe_last(recipe, user):

|

|||||||

def page_help(page_name):

|

def page_help(page_name):

|

||||||

help_pages = {

|

help_pages = {

|

||||||

'edit_storage': 'https://docs.tandoor.dev/features/external_recipes/',

|

'edit_storage': 'https://docs.tandoor.dev/features/external_recipes/',

|

||||||

|

'list_connector_config': 'https://docs.tandoor.dev/features/connectors/',

|

||||||

|

'new_connector_config': 'https://docs.tandoor.dev/features/connectors/',

|

||||||

|

'edit_connector_config': 'https://docs.tandoor.dev/features/connectors/',

|

||||||

'view_shopping': 'https://docs.tandoor.dev/features/shopping/',

|

'view_shopping': 'https://docs.tandoor.dev/features/shopping/',

|

||||||

'view_import': 'https://docs.tandoor.dev/features/import_export/',

|

'view_import': 'https://docs.tandoor.dev/features/import_export/',

|

||||||

'view_export': 'https://docs.tandoor.dev/features/import_export/',

|

'view_export': 'https://docs.tandoor.dev/features/import_export/',

|

||||||

|

|||||||

@ -1,5 +1,3 @@

|

|||||||

from dataclasses import dataclass

|

|

||||||

|

|

||||||

import pytest

|

import pytest

|

||||||

from django.contrib import auth

|

from django.contrib import auth

|

||||||

from mock.mock import Mock

|

from mock.mock import Mock

|

||||||

|

|||||||

@ -181,10 +181,9 @@ class FuzzyFilterMixin(ViewSetMixin, ExtendedRecipeMixin):

|

|||||||

self.queryset = self.queryset.filter(space=self.request.space).order_by(Lower('name').asc())

|

self.queryset = self.queryset.filter(space=self.request.space).order_by(Lower('name').asc())

|

||||||

query = self.request.query_params.get('query', None)

|

query = self.request.query_params.get('query', None)

|

||||||

if self.request.user.is_authenticated:

|

if self.request.user.is_authenticated:

|

||||||

fuzzy = self.request.user.searchpreference.lookup or any(

|

fuzzy = self.request.user.searchpreference.lookup or any([self.model.__name__.lower() in x for x in

|

||||||

[self.model.__name__.lower() in x for x in

|

self.request.user.searchpreference.trigram.values_list(

|

||||||

self.request.user.searchpreference.trigram.values_list(

|

'field', flat=True)])

|

||||||

'field', flat=True)])

|

|

||||||

else:

|

else:

|

||||||

fuzzy = True

|

fuzzy = True

|

||||||

|

|

||||||

@ -204,10 +203,8 @@ class FuzzyFilterMixin(ViewSetMixin, ExtendedRecipeMixin):

|

|||||||

filter |= Q(name__unaccent__icontains=query)

|

filter |= Q(name__unaccent__icontains=query)

|

||||||

|

|

||||||

self.queryset = (

|

self.queryset = (

|

||||||

self.queryset.annotate(

|

self.queryset.annotate(starts=Case(When(name__istartswith=query, then=(Value(100))),

|

||||||

starts=Case(

|

default=Value(0))) # put exact matches at the top of the result set

|

||||||

When(name__istartswith=query, then=(Value(100))),

|

|

||||||

default=Value(0))) # put exact matches at the top of the result set

|

|

||||||

.filter(filter).order_by('-starts', Lower('name').asc())

|

.filter(filter).order_by('-starts', Lower('name').asc())

|

||||||

)

|

)

|

||||||

|

|

||||||

@ -329,9 +326,8 @@ class TreeMixin(MergeMixin, FuzzyFilterMixin, ExtendedRecipeMixin):

|

|||||||

return self.annotate_recipe(queryset=super().get_queryset(), request=self.request, serializer=self.serializer_class, tree=True)

|

return self.annotate_recipe(queryset=super().get_queryset(), request=self.request, serializer=self.serializer_class, tree=True)

|

||||||

self.queryset = self.queryset.filter(space=self.request.space).order_by(Lower('name').asc())

|

self.queryset = self.queryset.filter(space=self.request.space).order_by(Lower('name').asc())

|

||||||

|

|

||||||

return self.annotate_recipe(

|

return self.annotate_recipe(queryset=self.queryset, request=self.request, serializer=self.serializer_class,

|

||||||

queryset=self.queryset, request=self.request, serializer=self.serializer_class,

|

tree=True)

|

||||||

tree=True)

|

|

||||||

|

|

||||||

@decorators.action(detail=True, url_path='move/(?P<parent>[^/.]+)', methods=['PUT'], )

|

@decorators.action(detail=True, url_path='move/(?P<parent>[^/.]+)', methods=['PUT'], )

|

||||||

@decorators.renderer_classes((TemplateHTMLRenderer, JSONRenderer))

|

@decorators.renderer_classes((TemplateHTMLRenderer, JSONRenderer))

|

||||||

@ -575,9 +571,8 @@ class FoodViewSet(viewsets.ModelViewSet, TreeMixin):

|

|||||||

pass

|

pass

|

||||||

|

|

||||||

self.queryset = super().get_queryset()

|

self.queryset = super().get_queryset()

|

||||||

shopping_status = ShoppingListEntry.objects.filter(

|

shopping_status = ShoppingListEntry.objects.filter(space=self.request.space, food=OuterRef('id'),

|

||||||

space=self.request.space, food=OuterRef('id'),

|

checked=False).values('id')

|

||||||

checked=False).values('id')

|

|

||||||

# onhand_status = self.queryset.annotate(onhand_status=Exists(onhand_users_set__in=[shared_users]))

|

# onhand_status = self.queryset.annotate(onhand_status=Exists(onhand_users_set__in=[shared_users]))

|

||||||

return self.queryset \

|

return self.queryset \

|

||||||

.annotate(shopping_status=Exists(shopping_status)) \

|

.annotate(shopping_status=Exists(shopping_status)) \

|

||||||

@ -598,9 +593,8 @@ class FoodViewSet(viewsets.ModelViewSet, TreeMixin):

|

|||||||

shared_users = list(self.request.user.get_shopping_share())

|

shared_users = list(self.request.user.get_shopping_share())

|

||||||

shared_users.append(request.user)

|

shared_users.append(request.user)

|

||||||

if request.data.get('_delete', False) == 'true':

|

if request.data.get('_delete', False) == 'true':

|

||||||

ShoppingListEntry.objects.filter(

|

ShoppingListEntry.objects.filter(food=obj, checked=False, space=request.space,

|

||||||

food=obj, checked=False, space=request.space,

|

created_by__in=shared_users).delete()

|

||||||

created_by__in=shared_users).delete()

|

|

||||||

content = {'msg': _(f'{obj.name} was removed from the shopping list.')}

|

content = {'msg': _(f'{obj.name} was removed from the shopping list.')}

|

||||||

return Response(content, status=status.HTTP_204_NO_CONTENT)

|

return Response(content, status=status.HTTP_204_NO_CONTENT)

|

||||||

|

|

||||||

@ -608,9 +602,8 @@ class FoodViewSet(viewsets.ModelViewSet, TreeMixin):

|

|||||||

unit = request.data.get('unit', None)

|

unit = request.data.get('unit', None)

|

||||||

content = {'msg': _(f'{obj.name} was added to the shopping list.')}

|

content = {'msg': _(f'{obj.name} was added to the shopping list.')}

|

||||||

|

|

||||||

ShoppingListEntry.objects.create(

|

ShoppingListEntry.objects.create(food=obj, amount=amount, unit=unit, space=request.space,

|

||||||

food=obj, amount=amount, unit=unit, space=request.space,

|

created_by=request.user)

|

||||||

created_by=request.user)

|

|

||||||

return Response(content, status=status.HTTP_204_NO_CONTENT)

|

return Response(content, status=status.HTTP_204_NO_CONTENT)

|

||||||

|

|

||||||

@decorators.action(detail=True, methods=['POST'], )

|

@decorators.action(detail=True, methods=['POST'], )

|

||||||

@ -623,11 +616,8 @@ class FoodViewSet(viewsets.ModelViewSet, TreeMixin):

|

|||||||

|

|

||||||

response = requests.get(f'https://api.nal.usda.gov/fdc/v1/food/{food.fdc_id}?api_key={FDC_API_KEY}')

|

response = requests.get(f'https://api.nal.usda.gov/fdc/v1/food/{food.fdc_id}?api_key={FDC_API_KEY}')

|

||||||

if response.status_code == 429:

|

if response.status_code == 429:

|

||||||

return JsonResponse(

|

return JsonResponse({'msg': 'API Key Rate Limit reached/exceeded, see https://api.data.gov/docs/rate-limits/ for more information. Configure your key in Tandoor using environment FDC_API_KEY variable.'}, status=429,

|

||||||

{'msg':

|

json_dumps_params={'indent': 4})

|

||||||

'API Key Rate Limit reached/exceeded, see https://api.data.gov/docs/rate-limits/ for more information. Configure your key in Tandoor using environment FDC_API_KEY variable.'},

|

|

||||||

status=429,

|

|

||||||

json_dumps_params={'indent': 4})

|

|

||||||

|

|

||||||

try:

|

try:

|

||||||

data = json.loads(response.content)

|

data = json.loads(response.content)

|

||||||

@ -883,14 +873,12 @@ class RecipePagination(PageNumberPagination):

|

|||||||

return super().paginate_queryset(queryset, request, view)

|

return super().paginate_queryset(queryset, request, view)

|

||||||

|

|

||||||

def get_paginated_response(self, data):

|

def get_paginated_response(self, data):

|

||||||

return Response(

|

return Response(OrderedDict([

|

||||||

OrderedDict(

|

('count', self.page.paginator.count),

|

||||||

[

|

('next', self.get_next_link()),

|

||||||

('count', self.page.paginator.count),

|

('previous', self.get_previous_link()),

|

||||||

('next', self.get_next_link()),

|

('results', data),

|

||||||

('previous', self.get_previous_link()),

|

]))

|

||||||

('results', data),

|

|

||||||

]))

|

|

||||||

|

|

||||||

|

|

||||||

class RecipeViewSet(viewsets.ModelViewSet):

|

class RecipeViewSet(viewsets.ModelViewSet):

|

||||||

@ -976,10 +964,9 @@ class RecipeViewSet(viewsets.ModelViewSet):

|

|||||||

|

|

||||||

def list(self, request, *args, **kwargs):

|

def list(self, request, *args, **kwargs):

|

||||||

if self.request.GET.get('debug', False):

|

if self.request.GET.get('debug', False):

|

||||||

return JsonResponse(

|

return JsonResponse({

|

||||||

{

|

'new': str(self.get_queryset().query),

|

||||||

'new': str(self.get_queryset().query),

|

})

|

||||||

})

|

|

||||||

return super().list(request, *args, **kwargs)

|

return super().list(request, *args, **kwargs)

|

||||||

|

|

||||||

def get_serializer_class(self):

|

def get_serializer_class(self):

|

||||||

@ -1149,10 +1136,8 @@ class ShoppingListEntryViewSet(viewsets.ModelViewSet):

|

|||||||

permission_classes = [(CustomIsOwner | CustomIsShared) & CustomTokenHasReadWriteScope]

|

permission_classes = [(CustomIsOwner | CustomIsShared) & CustomTokenHasReadWriteScope]

|

||||||

query_params = [

|

query_params = [

|

||||||

QueryParam(name='id', description=_('Returns the shopping list entry with a primary key of id. Multiple values allowed.'), qtype='int'),

|

QueryParam(name='id', description=_('Returns the shopping list entry with a primary key of id. Multiple values allowed.'), qtype='int'),

|

||||||

QueryParam(

|

QueryParam(name='checked', description=_('Filter shopping list entries on checked. [''true'', ''false'', ''both'', ''<b>recent</b>'']<br> - ''recent'' includes unchecked items and recently completed items.')

|

||||||

name='checked', description=_(

|

),

|

||||||

'Filter shopping list entries on checked. [''true'', ''false'', ''both'', ''<b>recent</b>'']<br> - ''recent'' includes unchecked items and recently completed items.')

|

|

||||||

),

|

|

||||||

QueryParam(name='supermarket', description=_('Returns the shopping list entries sorted by supermarket category order.'), qtype='int'),

|

QueryParam(name='supermarket', description=_('Returns the shopping list entries sorted by supermarket category order.'), qtype='int'),

|

||||||

]

|

]

|

||||||

schema = QueryParamAutoSchema()

|

schema = QueryParamAutoSchema()

|

||||||

@ -1343,28 +1328,25 @@ class CustomAuthToken(ObtainAuthToken):

|

|||||||

throttle_classes = [AuthTokenThrottle]

|

throttle_classes = [AuthTokenThrottle]

|

||||||

|

|

||||||

def post(self, request, *args, **kwargs):

|

def post(self, request, *args, **kwargs):

|

||||||

serializer = self.serializer_class(

|

serializer = self.serializer_class(data=request.data,

|

||||||

data=request.data,

|

context={'request': request})

|

||||||

context={'request': request})

|

|

||||||

serializer.is_valid(raise_exception=True)

|

serializer.is_valid(raise_exception=True)

|

||||||

user = serializer.validated_data['user']

|

user = serializer.validated_data['user']

|

||||||

if token := AccessToken.objects.filter(user=user, expires__gt=timezone.now(), scope__contains='read').filter(

|

if token := AccessToken.objects.filter(user=user, expires__gt=timezone.now(), scope__contains='read').filter(

|

||||||

scope__contains='write').first():

|

scope__contains='write').first():

|

||||||

access_token = token

|

access_token = token

|

||||||

else:

|

else:

|

||||||

access_token = AccessToken.objects.create(

|

access_token = AccessToken.objects.create(user=user, token=f'tda_{str(uuid.uuid4()).replace("-", "_")}',

|

||||||

user=user, token=f'tda_{str(uuid.uuid4()).replace("-", "_")}',

|

expires=(timezone.now() + timezone.timedelta(days=365 * 5)),

|

||||||

expires=(timezone.now() + timezone.timedelta(days=365 * 5)),

|

scope='read write app')

|

||||||

scope='read write app')

|

return Response({

|

||||||

return Response(

|

'id': access_token.id,

|

||||||

{

|

'token': access_token.token,

|

||||||

'id': access_token.id,

|

'scope': access_token.scope,

|

||||||

'token': access_token.token,

|

'expires': access_token.expires,

|

||||||

'scope': access_token.scope,

|

'user_id': access_token.user.pk,

|

||||||

'expires': access_token.expires,

|

'test': user.pk

|

||||||

'user_id': access_token.user.pk,

|

})

|

||||||

'test': user.pk

|

|

||||||

})

|

|

||||||

|

|

||||||

|

|

||||||

class RecipeUrlImportView(APIView):

|

class RecipeUrlImportView(APIView):

|

||||||

@ -1393,71 +1375,61 @@ class RecipeUrlImportView(APIView):

|

|||||||

url = serializer.validated_data.get('url', None)

|

url = serializer.validated_data.get('url', None)

|

||||||

data = unquote(serializer.validated_data.get('data', None))

|

data = unquote(serializer.validated_data.get('data', None))

|

||||||

if not url and not data:

|

if not url and not data:

|

||||||

return Response(

|

return Response({

|

||||||

{

|

'error': True,

|

||||||

'error': True,

|

'msg': _('Nothing to do.')

|

||||||

'msg': _('Nothing to do.')

|

}, status=status.HTTP_400_BAD_REQUEST)

|

||||||

}, status=status.HTTP_400_BAD_REQUEST)

|

|

||||||

|

|

||||||

elif url and not data:

|

elif url and not data:

|

||||||

if re.match('^(https?://)?(www\\.youtube\\.com|youtu\\.be)/.+$', url):

|

if re.match('^(https?://)?(www\\.youtube\\.com|youtu\\.be)/.+$', url):

|

||||||

if validators.url(url, public=True):

|

if validators.url(url, public=True):

|

||||||

return Response(

|

return Response({

|

||||||

{

|

'recipe_json': get_from_youtube_scraper(url, request),

|

||||||

'recipe_json': get_from_youtube_scraper(url, request),

|

'recipe_images': [],

|

||||||

'recipe_images': [],

|

}, status=status.HTTP_200_OK)

|

||||||

}, status=status.HTTP_200_OK)

|

|

||||||

if re.match(

|

if re.match(

|

||||||

'^(.)*/view/recipe/[0-9]+/[0-9a-f]{8}-[0-9a-f]{4}-[1-5][0-9a-f]{3}-[89ab][0-9a-f]{3}-[0-9a-f]{12}$',

|

'^(.)*/view/recipe/[0-9]+/[0-9a-f]{8}-[0-9a-f]{4}-[1-5][0-9a-f]{3}-[89ab][0-9a-f]{3}-[0-9a-f]{12}$',

|

||||||

url):

|

url):

|

||||||

recipe_json = requests.get(

|

recipe_json = requests.get(

|

||||||

url.replace('/view/recipe/', '/api/recipe/').replace(

|

url.replace('/view/recipe/', '/api/recipe/').replace(re.split('/view/recipe/[0-9]+', url)[1],

|

||||||

re.split('/view/recipe/[0-9]+', url)[1],

|

'') + '?share=' +

|

||||||

'') + '?share=' +

|

|

||||||

re.split('/view/recipe/[0-9]+', url)[1].replace('/', '')).json()

|

re.split('/view/recipe/[0-9]+', url)[1].replace('/', '')).json()

|

||||||

recipe_json = clean_dict(recipe_json, 'id')

|

recipe_json = clean_dict(recipe_json, 'id')

|

||||||

serialized_recipe = RecipeExportSerializer(data=recipe_json, context={'request': request})

|

serialized_recipe = RecipeExportSerializer(data=recipe_json, context={'request': request})

|

||||||

if serialized_recipe.is_valid():

|

if serialized_recipe.is_valid():

|

||||||

recipe = serialized_recipe.save()

|

recipe = serialized_recipe.save()

|

||||||

if validators.url(recipe_json['image'], public=True):

|

if validators.url(recipe_json['image'], public=True):

|

||||||

recipe.image = File(

|

recipe.image = File(handle_image(request,

|

||||||

handle_image(

|

File(io.BytesIO(requests.get(recipe_json['image']).content),

|

||||||

request,

|

name='image'),

|

||||||

File(

|

filetype=pathlib.Path(recipe_json['image']).suffix),

|

||||||

io.BytesIO(requests.get(recipe_json['image']).content),

|

name=f'{uuid.uuid4()}_{recipe.pk}{pathlib.Path(recipe_json["image"]).suffix}')

|

||||||

name='image'),

|

|

||||||

filetype=pathlib.Path(recipe_json['image']).suffix),

|

|

||||||

name=f'{uuid.uuid4()}_{recipe.pk}{pathlib.Path(recipe_json["image"]).suffix}')

|

|

||||||

recipe.save()

|

recipe.save()

|

||||||

return Response(

|

return Response({

|

||||||

{

|

'link': request.build_absolute_uri(reverse('view_recipe', args={recipe.pk}))

|

||||||

'link': request.build_absolute_uri(reverse('view_recipe', args={recipe.pk}))

|

}, status=status.HTTP_201_CREATED)

|

||||||

}, status=status.HTTP_201_CREATED)

|

|

||||||

else:

|

else:

|

||||||

try:

|

try:

|

||||||

if validators.url(url, public=True):

|

if validators.url(url, public=True):

|

||||||

scrape = scrape_me(url_path=url, wild_mode=True)

|

scrape = scrape_me(url_path=url, wild_mode=True)

|

||||||

|

|

||||||

else:

|

else:

|

||||||

return Response(

|

return Response({

|

||||||

{

|

'error': True,

|

||||||

'error': True,

|

'msg': _('Invalid Url')

|

||||||

'msg': _('Invalid Url')

|

}, status=status.HTTP_400_BAD_REQUEST)

|

||||||

}, status=status.HTTP_400_BAD_REQUEST)

|

|

||||||

except NoSchemaFoundInWildMode:

|

except NoSchemaFoundInWildMode:

|

||||||

pass

|

pass

|

||||||

except requests.exceptions.ConnectionError:

|

except requests.exceptions.ConnectionError:

|

||||||

return Response(

|

return Response({

|

||||||

{

|

'error': True,

|

||||||

'error': True,

|

'msg': _('Connection Refused.')

|

||||||

'msg': _('Connection Refused.')

|

}, status=status.HTTP_400_BAD_REQUEST)

|

||||||

}, status=status.HTTP_400_BAD_REQUEST)

|

|

||||||

except requests.exceptions.MissingSchema:

|

except requests.exceptions.MissingSchema:

|

||||||

return Response(

|

return Response({

|

||||||

{

|

'error': True,

|

||||||

'error': True,

|

'msg': _('Bad URL Schema.')

|

||||||

'msg': _('Bad URL Schema.')

|

}, status=status.HTTP_400_BAD_REQUEST)

|

||||||

}, status=status.HTTP_400_BAD_REQUEST)

|

|

||||||

else:

|

else:

|

||||||

try:

|

try:

|

||||||

data_json = json.loads(data)

|

data_json = json.loads(data)

|

||||||

@ -1473,18 +1445,16 @@ class RecipeUrlImportView(APIView):

|

|||||||

scrape = text_scraper(text=data, url=found_url)

|

scrape = text_scraper(text=data, url=found_url)

|

||||||

|

|

||||||

if scrape:

|

if scrape:

|

||||||

return Response(

|

return Response({

|

||||||

{

|

'recipe_json': helper.get_from_scraper(scrape, request),

|

||||||

'recipe_json': helper.get_from_scraper(scrape, request),

|

'recipe_images': list(dict.fromkeys(get_images_from_soup(scrape.soup, url))),

|

||||||

'recipe_images': list(dict.fromkeys(get_images_from_soup(scrape.soup, url))),

|

}, status=status.HTTP_200_OK)

|

||||||

}, status=status.HTTP_200_OK)

|

|

||||||

|

|

||||||

else:

|

else:

|

||||||

return Response(

|

return Response({

|

||||||

{

|

'error': True,

|

||||||

'error': True,

|

'msg': _('No usable data could be found.')

|

||||||

'msg': _('No usable data could be found.')

|

}, status=status.HTTP_400_BAD_REQUEST)

|

||||||

}, status=status.HTTP_400_BAD_REQUEST)

|

|

||||||

else:

|

else:

|

||||||

return Response(serializer.errors, status=status.HTTP_400_BAD_REQUEST)

|

return Response(serializer.errors, status=status.HTTP_400_BAD_REQUEST)

|

||||||

|

|

||||||

@ -1576,9 +1546,8 @@ def import_files(request):

|

|||||||

|

|

||||||

return Response({'import_id': il.pk}, status=status.HTTP_200_OK)

|

return Response({'import_id': il.pk}, status=status.HTTP_200_OK)

|

||||||

except NotImplementedError:

|

except NotImplementedError:

|

||||||

return Response(

|

return Response({'error': True, 'msg': _('Importing is not implemented for this provider')},

|

||||||

{'error': True, 'msg': _('Importing is not implemented for this provider')},

|

status=status.HTTP_400_BAD_REQUEST)

|

||||||

status=status.HTTP_400_BAD_REQUEST)

|

|

||||||

else:

|

else:

|

||||||

return Response({'error': True, 'msg': form.errors}, status=status.HTTP_400_BAD_REQUEST)

|

return Response({'error': True, 'msg': form.errors}, status=status.HTTP_400_BAD_REQUEST)

|

||||||

|

|

||||||

@ -1654,9 +1623,8 @@ def get_recipe_file(request, recipe_id):

|

|||||||

@group_required('user')

|

@group_required('user')

|

||||||

def sync_all(request):

|

def sync_all(request):

|

||||||

if request.space.demo or settings.HOSTED:

|

if request.space.demo or settings.HOSTED:

|

||||||

messages.add_message(

|

messages.add_message(request, messages.ERROR,

|

||||||

request, messages.ERROR,

|

_('This feature is not yet available in the hosted version of tandoor!'))

|

||||||

_('This feature is not yet available in the hosted version of tandoor!'))

|

|

||||||

return redirect('index')

|

return redirect('index')

|

||||||

|

|

||||||

monitors = Sync.objects.filter(active=True).filter(space=request.user.userspace_set.filter(active=1).first().space)

|

monitors = Sync.objects.filter(active=True).filter(space=request.user.userspace_set.filter(active=1).first().space)

|

||||||

@ -1695,9 +1663,8 @@ def share_link(request, pk):

|

|||||||

if request.space.allow_sharing and has_group_permission(request.user, ('user',)):

|

if request.space.allow_sharing and has_group_permission(request.user, ('user',)):

|

||||||

recipe = get_object_or_404(Recipe, pk=pk, space=request.space)

|

recipe = get_object_or_404(Recipe, pk=pk, space=request.space)

|

||||||

link = ShareLink.objects.create(recipe=recipe, created_by=request.user, space=request.space)

|

link = ShareLink.objects.create(recipe=recipe, created_by=request.user, space=request.space)

|

||||||

return JsonResponse(

|

return JsonResponse({'pk': pk, 'share': link.uuid,

|

||||||

{'pk': pk, 'share': link.uuid,

|

'link': request.build_absolute_uri(reverse('view_recipe', args=[pk, link.uuid]))})

|

||||||

'link': request.build_absolute_uri(reverse('view_recipe', args=[pk, link.uuid]))})

|

|

||||||

else:

|

else:

|

||||||

return JsonResponse({'error': 'sharing_disabled'}, status=403)

|

return JsonResponse({'error': 'sharing_disabled'}, status=403)

|

||||||

|

|

||||||

|

|||||||

43

docs/features/connectors.md

Normal file


@ -0,0 +1,43 @@

|

|||||||

|

!!! warning

|

||||||

|

Connectors are currently in a beta stage.

|

||||||

|

|

||||||

|

## Connectors

|

||||||

|

|

||||||

|

Connectors are a powerful add-on component to TandoorRecipes.

|

||||||

|

They allow certain actions to be translated into API calls to external services.

|

||||||

|

|

||||||

|

### General Config

|

||||||

|

|

||||||

|

!!! danger

|

||||||

|

In order for this application to push data to external providers it needs to store authentication information.

|

||||||

|

Please use read-only/separate accounts or app passwords wherever possible.

|

||||||

|

|

||||||

|

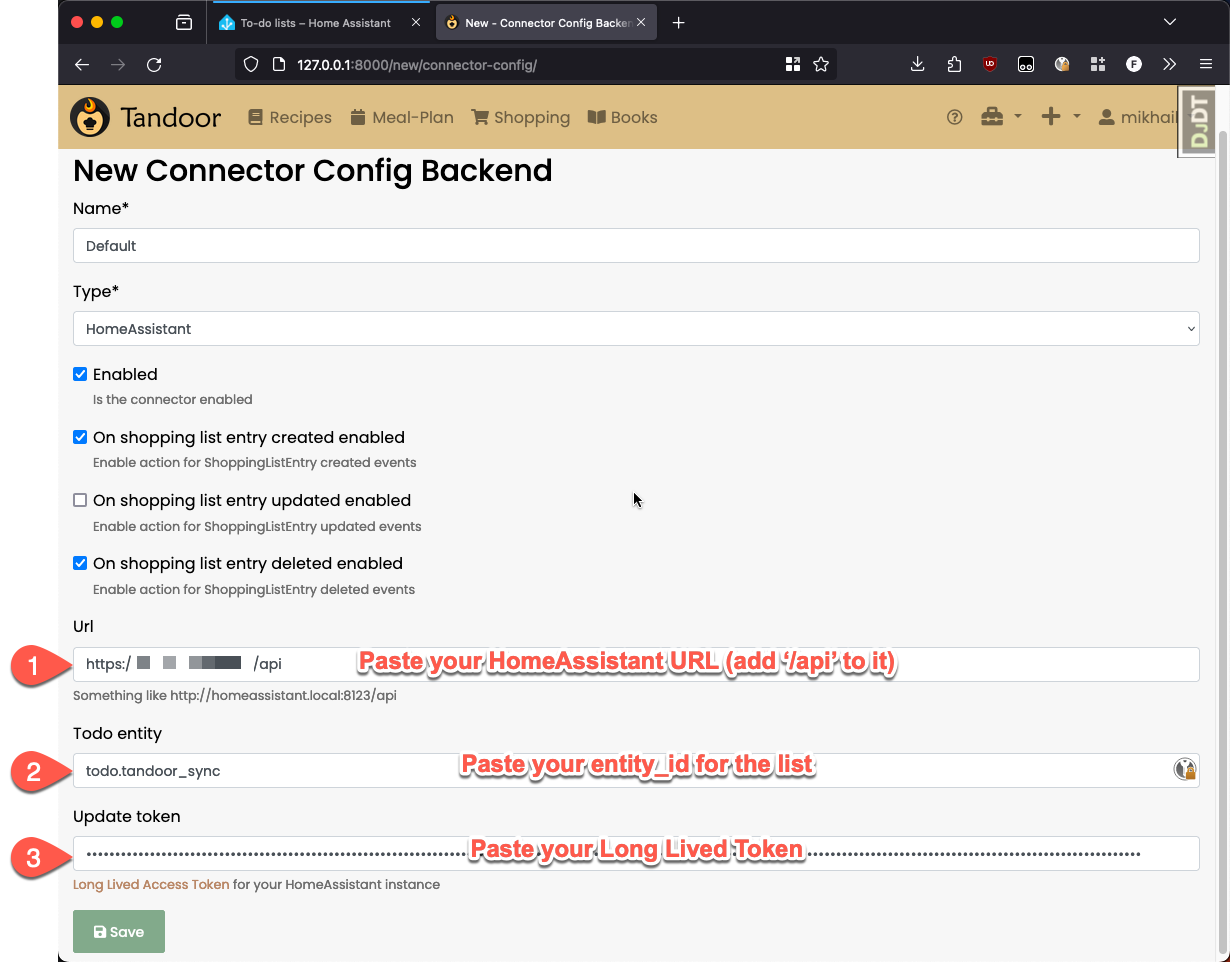

- `DISABLE_EXTERNAL_CONNECTORS` is a global switch to disable External Connectors entirely.

|

||||||

|

- `EXTERNAL_CONNECTORS_QUEUE_SIZE` is the number of changes that are kept in memory if the worker cannot keep up.
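As a rough illustration of what a bounded in-memory queue implies, here is a minimal sketch using Python's standard `queue` module. The helper name `enqueue_change` and the drop-when-full behaviour are assumptions made for illustration; the docs above do not say what the connector worker actually does once the limit is reached.

```python
import queue


def enqueue_change(q, change):
    """Try to buffer a change without blocking; return False if the queue is full.

    Hypothetical helper: dropping the change when full is an assumption,
    not confirmed behaviour of the connector worker.
    """
    try:
        q.put_nowait(change)
        return True
    except queue.Full:
        return False


# A queue that holds at most two pending changes
# (stands in for EXTERNAL_CONNECTORS_QUEUE_SIZE).
changes = queue.Queue(maxsize=2)
results = [enqueue_change(changes, name) for name in ("a", "b", "c")]
```

With a limit of two, the third change is rejected, so a larger queue size trades memory for tolerance of a slow external service.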

|

||||||

|

|

||||||

|

Example Config

|

||||||

|

```env

|

||||||

|

DISABLE_EXTERNAL_CONNECTORS=0 // 0 = connectors enabled, 1 = connectors disabled

|

||||||

|

EXTERNAL_CONNECTORS_QUEUE_SIZE=100

|

||||||

|

```
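The sketch below mirrors how these two values appear to be read in `settings.py` in this commit (`bool(int(...))` for the switch, `int(...)` for the queue size, with 100 as the new default). The function name `read_connector_settings` and the `env` parameter are hypothetical and only there to make the parsing testable on its own.

```python
import os


def read_connector_settings(env=os.environ):
    """Parse the connector settings from an environment mapping.

    Hypothetical helper for illustration; the project reads these values
    directly at module level in settings.py.
    """
    # "0" -> False (connectors enabled), "1" -> True (connectors disabled)
    disabled = bool(int(env.get('DISABLE_EXTERNAL_CONNECTORS', 0)))
    queue_size = int(env.get('EXTERNAL_CONNECTORS_QUEUE_SIZE', 100))
    return disabled, queue_size
```

Because the value goes through `int()`, it has to be a plain integer such as `0` or `1`; strings like `true` would raise a `ValueError`.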

|

||||||

|

|

||||||

|

## Current Connectors

|

||||||

|

|

||||||

|

### HomeAssistant

|

||||||

|

|

||||||

|

The current HomeAssistant connector supports the following features:

|

||||||

|

1. Push newly created shopping list items.

|

||||||

|

2. Push all shopping list items when a recipe is added to the shopping list.

|

||||||

|

3. Remove to-dos from HomeAssistant if they are unchanged and were removed through TandoorRecipes.

|

||||||

|

|

||||||

|

#### How to configure:

|

||||||

|

|

||||||

|

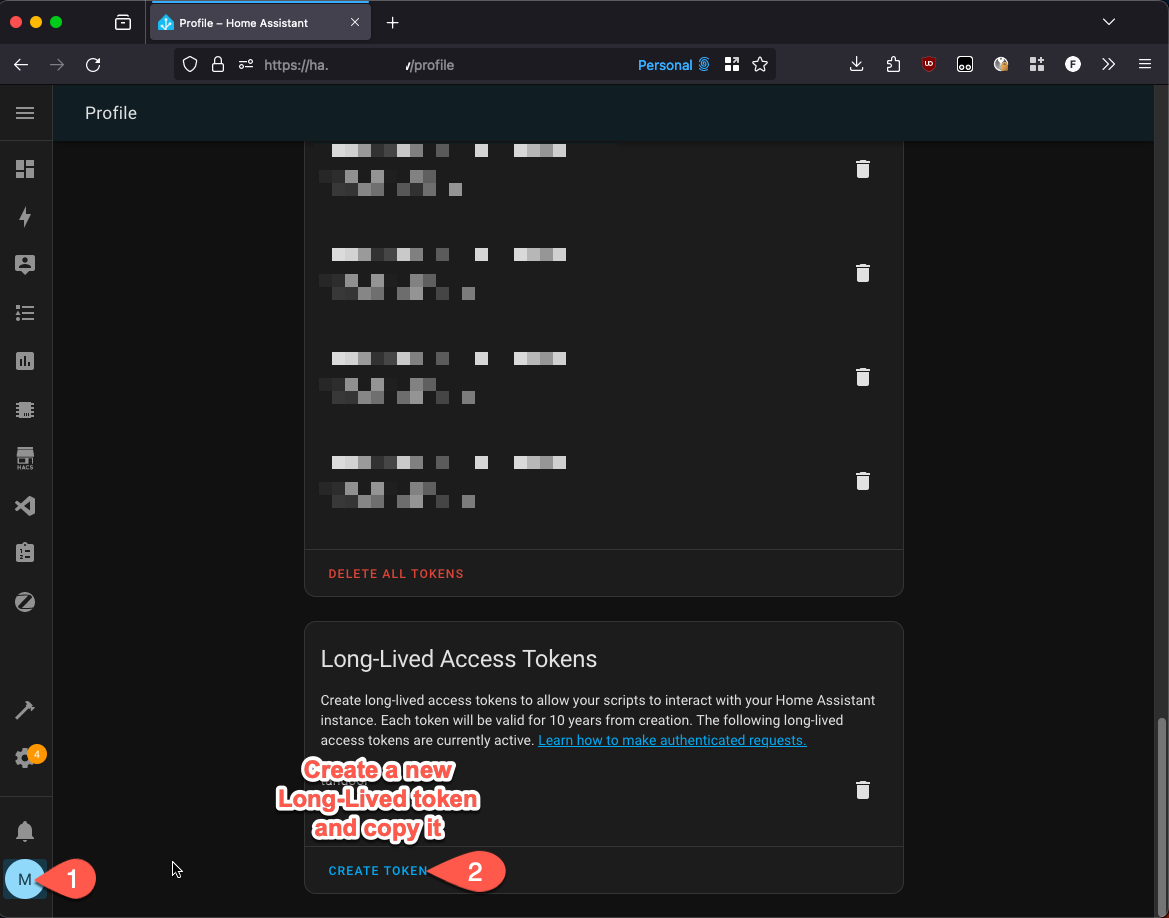

Step 1:

|

||||||

|

1. Generate a HomeAssistant Long-Lived Access Token

|

||||||

|

|

||||||

|

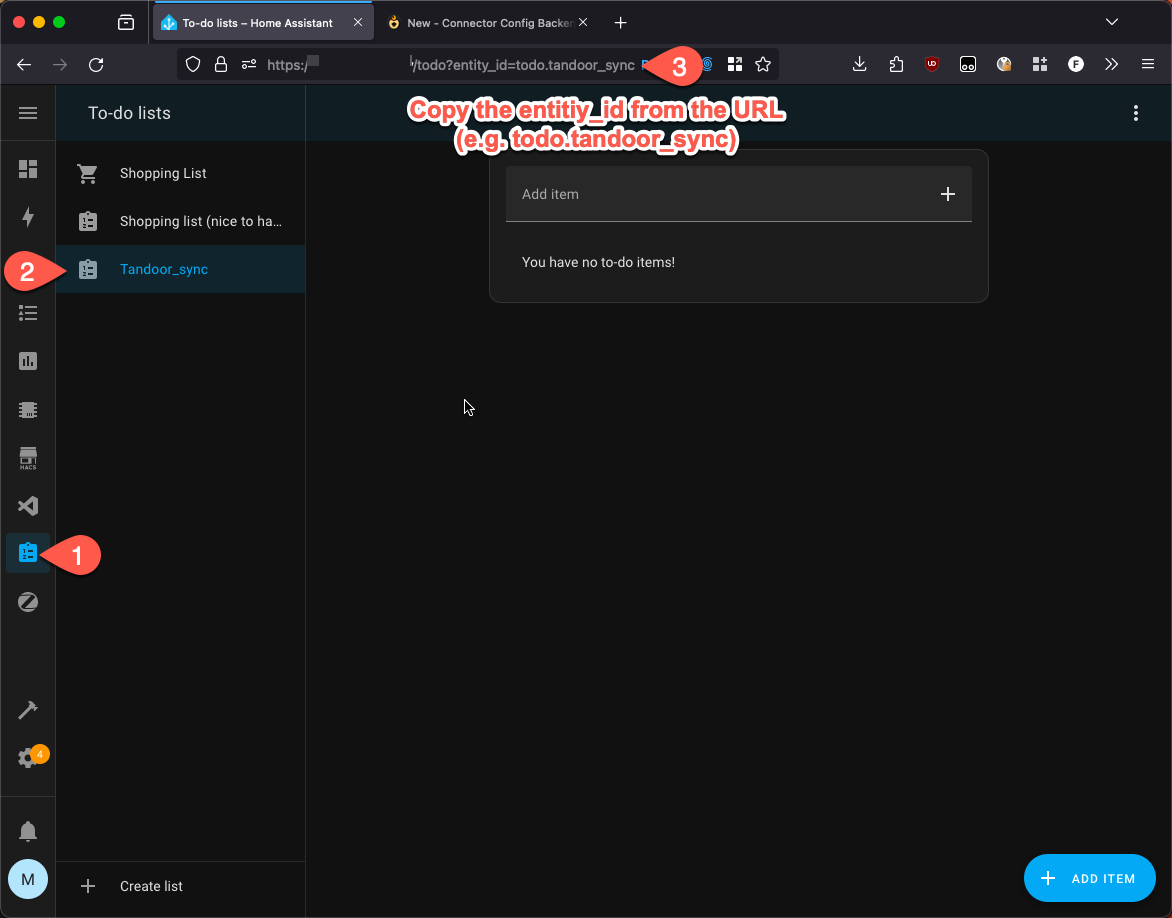

2. Get/create a todo list entity you want to sync to.

|

||||||

|

|

||||||

|

3. Create a connector

|

||||||

|

|

||||||

|

4. ???

|

||||||

|

5. Profit
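To make the token and todo list steps more concrete, here is a minimal sketch of the kind of request that adds an item to a HomeAssistant todo list over its REST API (`POST /api/services/todo/add_item` with a bearer token). It only builds the request and does not send it. The function name, the host, the token and the entity id `todo.shopping_list` are placeholders, and this is not necessarily how the connector in this commit talks to HomeAssistant.

```python
import json
import urllib.request


def build_add_item_request(base_url, token, entity_id, item):
    """Build (but do not send) a request for HomeAssistant's todo.add_item service.

    Hypothetical helper for illustration only.
    """
    url = f"{base_url.rstrip('/')}/api/services/todo/add_item"
    body = json.dumps({"entity_id": entity_id, "item": item}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            # The Long-Lived Access Token from step 1 goes here.
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


# Placeholder values; replace with your own host, token and todo entity.
req = build_add_item_request(
    "http://homeassistant.local:8123", "TOKEN", "todo.shopping_list", "Milk"
)
```

Passing `req` to `urllib.request.urlopen` would perform the call against a real instance.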

|

||||||

@ -437,16 +437,6 @@ key [here](https://fdc.nal.usda.gov/api-key-signup.html).

|

|||||||

FDC_API_KEY=DEMO_KEY

|

FDC_API_KEY=DEMO_KEY

|

||||||

```

|

```

|

||||||

|

|

||||||

#### External Connectors

|

|

||||||

|

|

||||||

`DISABLE_EXTERNAL_CONNECTORS` is a global switch to disable External Connectors entirely (e.g. HomeAssistant).

|

|

||||||

`EXTERNAL_CONNECTORS_QUEUE_SIZE` is the number of changes that are kept in memory if the worker cannot keep up.

|

|

||||||

|

|

||||||

```env

|

|

||||||

DISABLE_EXTERNAL_CONNECTORS=0 // 0 = connectors enabled, 1 = connectors disabled

|

|

||||||

EXTERNAL_CONNECTORS_QUEUE_SIZE=25

|

|

||||||

```

|

|

||||||

|

|

||||||

### Debugging/Development settings

|

### Debugging/Development settings

|

||||||

|

|

||||||

!!! warning

|

!!! warning

|

||||||

|

|||||||

@ -557,6 +557,6 @@ ACCOUNT_EMAIL_SUBJECT_PREFIX = os.getenv(

|

|||||||

'ACCOUNT_EMAIL_SUBJECT_PREFIX', '[Tandoor Recipes] ') # allauth sender prefix

|

'ACCOUNT_EMAIL_SUBJECT_PREFIX', '[Tandoor Recipes] ') # allauth sender prefix

|

||||||

|

|

||||||

DISABLE_EXTERNAL_CONNECTORS = bool(int(os.getenv('DISABLE_EXTERNAL_CONNECTORS', False)))

|

DISABLE_EXTERNAL_CONNECTORS = bool(int(os.getenv('DISABLE_EXTERNAL_CONNECTORS', False)))

|

||||||

EXTERNAL_CONNECTORS_QUEUE_SIZE = int(os.getenv('EXTERNAL_CONNECTORS_QUEUE_SIZE', 25))

|

EXTERNAL_CONNECTORS_QUEUE_SIZE = int(os.getenv('EXTERNAL_CONNECTORS_QUEUE_SIZE', 100))

|

||||||

|

|

||||||

mimetypes.add_type("text/javascript", ".js", True)

|

mimetypes.add_type("text/javascript", ".js", True)

|

||||||

|

|||||||
